DISCREDITING Their Fraudulent, Ai Created Digital History with AUTHENTIC Academic Papers

Retrieved from the personal website of Hao Li - USC-ICT Researcher and CEO of Pinscreen.com

I found Hao Li’s personal website after digging into the ‘Rearden LLC v Disney’ case which is an ongoing federal patent lawsuit taking place in the Northern District of California Federal court - with the last docket entry #777 being submitted into the record on December 17th, 2024.

The entire case can be viewed at the following link -

https://www.courtlistener.com/docket/6121204/rearden-llc-v-the-walt-disney-company/?page=1

This is a huge find, as these academic papers I downloaded from Hao Li’s personal website are all AUTHENTIC - meaning that they completely discredit Paul Debevec’s fraudulent 2006 academic papers and the completely false digital history that these incompetent fools shat out across the entire internet. Take note of their ‘secret’ Freemasonic symbology scattered throughout these fake papers for the purpose of letting all of their other buddies know who is behind their failed fraud. I’ve thoroughly exposed that as well - just like everything else.

These people are not ‘powerful’ - they are bumbling, fucking idiots. They have all of the money, technology, manpower, and resources at their complete control, yet I have single handedly exposed the entire, failed ‘operation’ they’ve been waging against me.

The most important point of all of this is that I have ALWAYS been willing to simply sell them everything from the first moment I found the fraudulent, duplicate Netflix patent application, that was filed just 12 days after I filed my provisional application (stolen directly out of the USPTO - at which point I became the target of an illegal surveillance operation courtesy of our incompetent, supremacist, technocratic, government overlords..)

Their fake, Ai created digital history includes not only fake academic papers that were published to accredited academic publishing sites, but also completely fabricated digital patent records - meaning that this criminal conspiracy directly involves the USPTO itself.

Fraudulent USPTO Patent documents -

These supposed 2006 research papers DO NOT EXIST AT ALL in the 75 AUTHENTIC research papers I downloaded from Hao Li’s personal website = Hao Li hasn’t been let in on the fraud taking place, or at least didn’t get the memo for whatever reason.

A Ctrl + F search of the 75 academic research papers (I combined all of them into two separate grouped PDF documents that contain PDF’s 01-37 and 38-75 —-below) will reveal that these supposed papers DO NOT EXIST in any of these authentic academic papers. The term ‘Multiplexed’ doesn’t appear once, and the author ‘Einarsson’ NEVER appears in any of the papers either…

The ‘MOVA Contour’ is the patented technology that has now been falsely accredited to Paul Debevec, USC, and their fraudulently created historical record of ‘Light Stage 6’.

Keep in mind that I didn’t file my provisional patent application until March 19, 2021 - that means these public court records, exhibits, and declarations that all occurred prior to the filing of my provisional patent reveal the TRUTH - the one which they have been trying their best to cover up by simply producing more fraudulent content to support their LIES.

Perhaps that’s why the webpage for Disney’s ‘MEDUSA Facial Capture’ system no longer exists? - https://studios.disneyresearch.com/medusa/

And perhaps that’s why the project page listed on ILM’s website for ‘The Hulk’ is strangely empty? - https://www.ilm.com/vfx/the-hulk/

This is because they have re-written digital history with their criminal friends at YouTube / Google, along with all of their psychopath, genocide supporting friends scattered throughout the military-entertainment industrial complex they control - with every single bit of evidence I’ve established all leading back to Israel (more about this later though…)

Exhibit AF - Detailing their Ai Created False History and Netflix Fraud

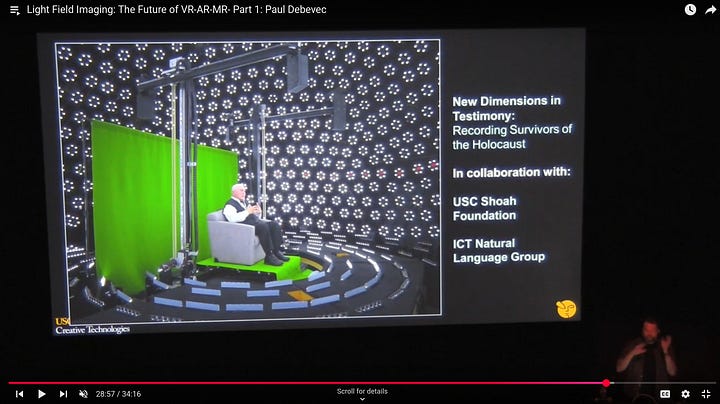

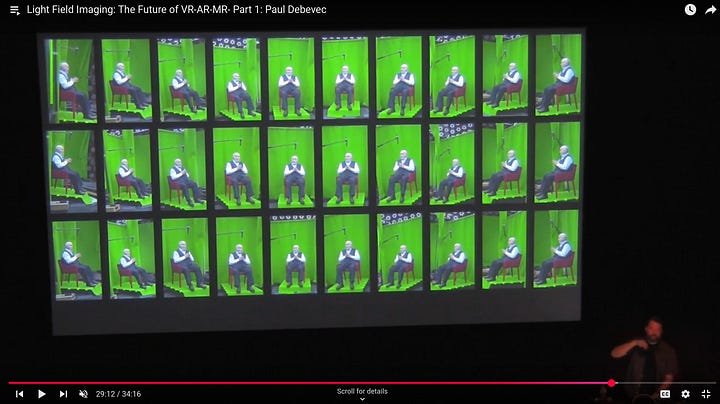

This includes their fake, Ai generated holographic holocaust survivor, that they created with direct support from the USC Shoah Foundation (Steven Spielberg..), along with the completely false historical record they have attributed to a little fat-faced Jew named Paul Debevec, via his fake 2006 ‘research papers’ which strangely don’t exist at all when searched for within these authentic academic papers I am sharing here.

Based upon these authentic academic papers - which were all sourced from Hao Li’s personal portfolio site, it’s safe to say that Hao Li was most likely not let in on, or made aware of the massive fraud operation being waged. Regardless, the question becomes “How much true history can they actually alter and delete to support their fraud?”

The papers shared on Hao Li’s personal website are essentially his life’s work - it would be the same as a completely false historical record being created that directly contradicted my own personal portfolio webpage and life’s work. In order to truly delete every bit of ‘authentic’ history they would need to either delete or alter the authentic body of work that Hao Li obviously takes great pride in, as it’s what defines his entire career and education - just as the patent I filed - which they are attempting to steal from me through their massive fraud operation, serves as the crowning achievement of all of my life’s work - a ‘life’ which these psychopath’s are trying to outright destroy through blatant lies, and a concerted effort to discredit me within the courts by pretending that all of my ‘perceived achievements’ and my belief that I am ‘an engineer’, and have ‘invented a revolutionary technology’ is nothing more than delusions which are the result of my supposed ‘psychotic disorder’ - the one that I need to be forced to take powerful antipsychotic drugs for in order to properly deal with…so that I can become ‘competent’ again.

I have a chronological timeline of high-profile, successful projects spanning 2008 - 2020 on my website MattGuertin.com.

I then top ALL of my previous work by thinking up a ‘breakthrough’, ‘disruptive’ idea during early 2021 - I file a provisional patent for it, and Netflix files a duplicate one just 12 days after me.

I bust my ass for two years and am JUST about to be able to film a demo for my idea after spending the previous two years designing, engineering, and building a prototype - as well as the company that would launch with it - AND THEN ?

..and then I spend the following two years declared as ‘psychotic’ and ‘incompetent’ as I am granted a patent, continue filing patents, trademarks, and try to move my business forward only to realize the full scope of what is taking place at which point I’ve done nothing but fight back against their lies ever since.

Do you see how that works? There is no ‘Constitutional Rights’ - they have been holding me hostage for over two years at this point through their weaponized mental health system. If I was ‘incompetent’ they would’ve already succeeded in disappearing me (literally..) over a year ago - as they clearly intended.

THEY HAVE STOLEN FOUR YEARS OF MY LIFE AT THIS POINT - along with the previous twenty years of hard work and time I spent learning and becoming self-educated.

https://arxiv.org/pdf/1612.00523v1

Paul Debevec’s light stage research has always been focused on capturing the human face. It has NEVER involved capturing the full, human body, as portrayed in his fraudulent 2006 academic papers

What I have uncovered breaks the entire illusion of our supposed free and democratic government institutions. This obviously includes the USPTO as well -

It is all BULLSHIT

The most ironic part about all of these official court documents is that the entire federal case only exists in the first place due to the fact that they couldn’t help themselves from stealing someone elses patented technology. It is this same exact, already stolen technology that they are now re-purposing, and using to steal mine as well.

‘Our’ government and ‘our’ military clearly don’t exist to protect the American people. Left is right. Up is down. Light is dark. Lies are truth.

All they have is LIES

They didn’t create anything-

They’ve stolen, and deceived.

I created the above video edit from the following YouTube source video - which is more fraudulent, back-dated content -

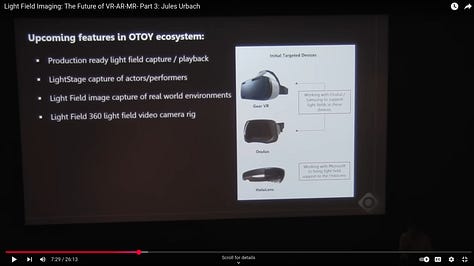

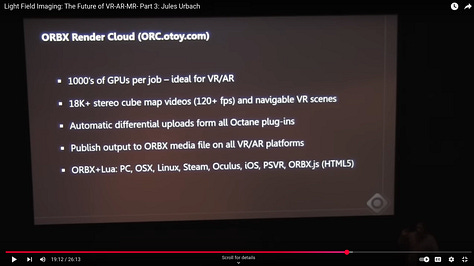

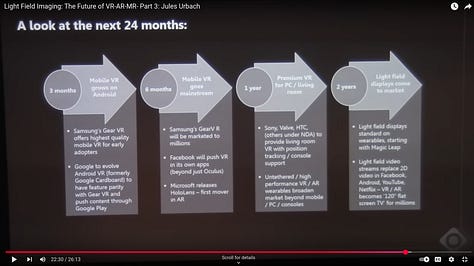

OTOY | GTC 2013 - The Convergence of Cinema and Games: From Performance Capture to Final Render - https://www.youtube.com/watch?v=etoS6daj20c

https://home.otoy.com/advisory-board/

”We have to be very careful what we say…”

“Again…I have to be very careful what I say”

“I have to be really careful what I say now…HE CAN’T SAY ANYTHING!”

IRREFUTABLE PROOF that ‘Part 3’ of this fraudulent, 4 part conference, was created in 2023 by ‘Google Inc.’ and then back-dated by YouTube

Skip ahead to the 11:00 mark of this Rumble video I recorded…..play in 4k

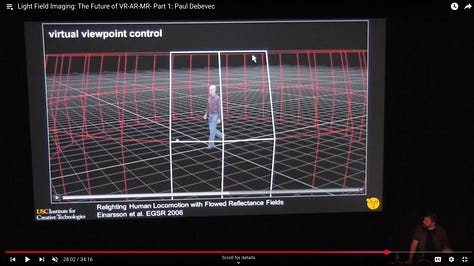

The entire focus, and the main angle of all of their fraud is ‘Light Fields’ - with ‘Light Fields’ being defined by a mathematical calculation called the ‘plenoptic function’. This is why they have Jon Karafin (the short one in the suit-coat who has to ‘be very careful’ what he says) included as part of their back-dated conference. They are attempting to pre-date the entire field of research pertaining to ‘light fields’ as it is this technology that they are currently implementing with my VR treadmill technology that they stole out of the USPTO. Light field capturing, and displays, combined with my stolen, patented technology is what finally makes a true ‘holodeck’ reality - as in the ability of a user to traverse through an infinitely vast, 3D, holographic environment that smells, looks, and feels nearly imperceptible from reality.

THIS IS THE ENTIRE REASON “THEY HAVE TO BE VERY CAREFUL” ABOUT WHAT THEY SAY

Because they don’t want to accidentally mention technology that didn’t exist yet in 2015…

..with 2015 being the supposed year that this conference took place. In reality YouTube simply back-dated this recently filmed ‘conference’ to the false year of 2015 - just as they have been doing now for a TON of additional fraudulent content being used to support their MASSIVE criminal conspiracy targeting the theft of my breakthrough VR treadmill technology that is worth BILLIONS of dollars over its 20 year patent life.

Just look at the in-depth analysis I conducted of their fraudulent, 4-part conference. I downloaded all four of the YouTube videos and ran them through Ai to get a text transcript of exactly ‘what’ they were talking about, and then input the transcripts to chatGPT, along with my patent in order to have it output an extremely detailed, and fact-based analysis of the specific ways that this fraudulent conference was being used to target my patent.

For Part 2 featuring Mark Bolas, they even inserted an exact duplicate of my patented technology as a stop-motion animation demo towards the end, and he also goes into great detail discussing the exact methodology of my granted patent disclosure, and claims insofar as simply rotating the person to match the virtual scene in order to create the illusion of the static camera orbiting around the person within the scene.

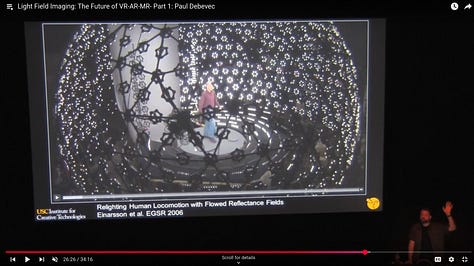

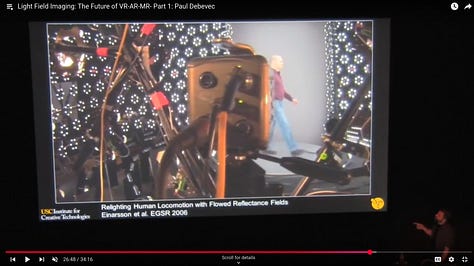

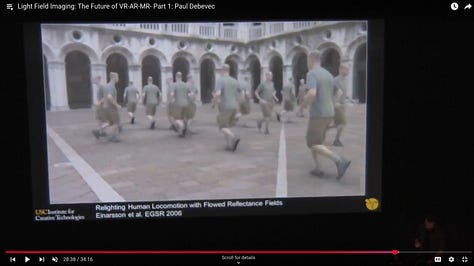

Additionally there is a concerted focus on the entire topic of someone being able to walk through a virtual environment. Take note of the fact that in Part 1, featuring Paul Debevec (guy with big, fat face) that all he has to show for his supposed 2006 research in which he supposedly already created mine, and Eyeline Studios patented technology in 2006, is the exact same content nearly ten years after the initial research supposedly occurred. With the supposed 2006 video content irrefutably proven to have been created using AI.

So the exact same, Ai generated video content of ‘Bruce’ on the treadmill - Which means that his brilliant technology never organically advanced whatsoever, and must’ve simply been packed up and put on a shelf shortly after (if we are to believe his lies that is..). In addition there was never any patent filed for his rotating treadmill, even though one of the supposed academic papers was re-packaged with a focus on its ‘vast implications in military training simulations’, with Paul Debevec’s research at USC actually being directly funded by the US Army / Government / Military. If this sounds believable to you then just wait until you meet everyone’s favorite Ai generated, holographic holocaust survivor, Pinchas Gutter, which is also part of their little bundle of Hollywood trickery and illusions…

Visual Effects Society | 4 Part Conference BACKDATED FRAUD

Be careful…..shhhhhhh. Don’t want to accidentally mention current technology that foils your backdated FRAUD.

Light Stage 6 | EXACT Same Technology Since 2006...

Not only does the video from 2018 reveal that it’s the exact same controller units, in the exact same position but apparently they never even had to take down any lights and do any re-configuring or major updates as the lights are all still rotated to the exact same positions even though as we can clearly see in the rear close-up’s of the actual lightin…

Out of all of these authentic academic papers I downloaded from Hao Li’s website, the term ‘plenoptic function’ (‘plenoptic function’ = ‘Light Fields’) only appears in a total of three of the papers.

Those being the following -

In the authentic academic research paper that contains the term ‘plenoptic’ and was published on December 17th, 2015 we are able to gain some insight into the current state of the art of light field research -

ZERO MENTION ^ ^ ^ of Paul Debevec’s supposed light field research…

Paul Debevec’s fraudulent 2006 academic research paper ‘Virtual Cinematography: Relighting through Computation’ on the other hand contains 14 different instances of the term ‘plenoptic function’

However, this term is NEVER used a single time in ANY of his three light stage patents spanning from 2006 to 2015…

It is this paper that contains the exact same, Ai generated images of ‘Bruce’ that we see over and over again in ALL presentations spanning from 2006, all the way up until 2023, when we end up seeing the exact same content featured in Paul Debevec’s fraudulent SIGGRAPH presentation on behalf of Netflix, and Eyeline Studios.

This means that apparently over a span of 17 YEARS Paul Debevec’s supposed 2006 research made ZERO advancements of any kind, as we NEVER see any images or video besides that which is purported to have originated in 2006 during the initial research.

…and even though Paul Debevec was supposedly light years ahead of the curve insofar as his supposed research into capturing not only the human face, but the entire human body, in addition to publishing a paper that delves into the topic of ‘light fields’ so in depth, that the term ‘plenoptic function’ is mentioned on 14 separate occasions - WE DO NOT SEE ANY MENTION OF HIS CLAIMED WORK IN THESE AUTHENTIC ACADEMIC PAPERS EVEN THOUGH THE AUTHOR IS NOT ONLY RESEARCHING THE EXACT SAME FIELDS OF RESEARCH, BUT IN FACT WORKS WITH, AND ALONGSIDE PAUL DEBEVEC UNDER THE SAME ROOF - With Hao Li and Paul Debevec actually being listed as joint researchers on multiple research papers shared on Hao Li’s personal website.

Meanwhile - even though there was a research paper published just one month AFTER the supposed 4 part VES ‘conference’ which clearly states that light field capture and display technology is still in its infancy during this time we have our magical time-travelers, Paul Debevec, Jules Urbach, Jon Karafin, and Mark Bolas holding a mutli-hour long conference in which they are not only focused on, and discussing topics of light field capture and displays which are way beyond the current state of the art during this time - but they are also sharing videos of advanced light field capture methods, and even show off an advanced light field capture device that appears to be a fully functional, ready-for-market consumer product.

Not to mention their advanced light field capture rig they also show off…

But not only are they years ahead of the current state of light field research - as clearly supported in the analysis I conducted - BUT - We once again are graced with another one of Paul Debevec’s ground-breaking presentations in which he still showing off the same 9 year old video footage of his ‘revolutionary rotating treadmill’ research in which he was able paradoxically implement the very same, advanced light field research 9 years ahead of their current time-traveled, back-dated ‘conference’.

Paul Debevec’s masterful skills in the art of deception, and Ai generated lies have granted him an ability to see many years into the future

OK..OK..OK…

So if all of Paul Debevec’s supposed 2006 ‘academic research’ is in fact all a complete fraud just as I have been claiming then the obvious question one might logically arrive at is -

“So then what was this lying, piece of shit, Paul Debevec, actually doing around this time period if his entire life is one big lie?”

I just so happen to have the answer for you! :-)

https://www.mova.com/pdf/Hollywood_Rep_advances_sigg.pdf

Paul Debevec was working as the festival chair of SIGGRAPH 2007 where he was busy conducting interviews to discuss the unveiling of Steve Perlman’s new ‘MOVA Contour’ facial capture technology - the very same technology that is now being credited to Paul Debevec and his supposed full-sized ‘Light Stage 6’ system that had already existed for a year at the time this interview took place, and had somehow already achieved the very same technological marvel that Paul Debevec, and many others in this article state STILL HAS NOT OCCURRED.

IT’S ALL LIES.

Said festival chair Paul Debevec: “To capture a human performance and bring it into the digital world in a way that represents the acting — we are just on the threshold of showing that it is possible.”

In addition, director David Fincher and Digital Domain are known to have been examining capture methods for production of “The Curious Case of Benjamin Button,” which would show Brad Pitt aging in reverse.

In the case of CG humans, there already have been successes, largely for stunt doubles or performances where the use of a live actor would not be practical or possible. But for actual acting — like sitting at a table having a conversation — most agree that the industry is not there yet.

In some cases, these efforts have resulted in an area known as the “uncanny valley,” the point where the CG human is realistic but not quite right, introducing a perceptual zone known to cause a dip in empathy from an audience. VFX pros agree that the industry is getting closer to overcoming this hurdle, but Digital Domain vp advanced strategy Kim Libreri believes it remains a year or two away.

From a technical standpoint, the removal of markers from the motion-capture process is one direction innovation is heading.

Organic Motion is readying a markerless body motion capture system that uses a mocap stage. “We use new types of computer vision to track and digitize the human shape without the need to attach markers or any types of devices,” Organic Motion founder and CEO Andrew Tschesnok said.

Meanwhile, Image Metrics is developing technology to enable markerless facial animation by capturing data directly from video. Mova will demonstrate techniques at Siggraph with motion capture system developer Vicon.

ILM’s iMo-Cap, for instance, allows the actors to perform on set or on location while a director is shooting the scene. “It’s not about technology; it’s about the creative process,” ILM’s Michael Sanders said.

Why in the world didn’t Paul Debevec speak up and let all of these people know that he already succeeded one year earlier, in achieving that which all of these movie producers are yearning for so badly?

BECAUSE IT’S ALL Ai GENERATED FRAUD !

Just like Paul Debevec’s fraudulent 2023 SIGGRAPH video in which he made sure not to disclose his direct SIGGRAPH affiliations at all in the video…

The very same ‘prestigious’ SIGGRAPH that’s obviously been a willing participant in helping to distribute completely fraudulent academic papers across it’s archived body of legitimate research papers.

In fact all of their fraud and lies are revealed in a rather irrefutable and compelling manner in many of the additional court filing submitted into the ‘Rearden LLC v Disney’ federal case..

As an example, it actually turns out that the MOVA Contour system was introduced at the exact same time that Paul Debevec’s claimed 2006 research also was -

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347.1.0.pdf

at which point it was then used to produce ‘The Curious Case of Benjamin Button’, and ‘The Avengers’, among many others.

Yet for some reason it is now being portrayed that many of these same films were instead produced using Paul Debevec’s research, along with USC’s ‘Light Stage 6’ system.

https://www.npr.org/2009/02/17/100668766/building-the-curious-faces-of-benjamin-button-npr/

And what about the movie ‘Maleficent’ as well as ‘Benjamin Button’, as claimed in this official ‘USC Viterbi Magazine’ article prominently featuring Barack Obama?

“The Light Stage system has been used extensively in Hollywood to scan actors for their virtual roles in blockbusters including “Avatar,” “The Curious Case of Benjamin Button” and “Maleficent.” In 2010, Debevec and his collaborators received a Scientific and Engineering Academy Award in recognition of their contribution to visual effects via the development of the Light Stage.”

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347.1.0.pdf

Looks like more fraud and lies if you ask me. Based on this, it also appears that USC is directly involved in supporting the criminal conspiracy taking place…

“Last June, USC Institute for Creative Technologies (ICT) Chief Visual Officer Paul Debevec traveled to the White House to join a Smithsonian Institution-led team in creating the first-ever 3-D portrait and 3-D-printed bust of a U.S. president.”

I can’t believe that they would just involve Obama in their criminal conspiracy as well. I bet he’s gonna be pissed when he discovers that they used him as a pawn to help facilitate their fraudulent, criminal bullshit…

“Debevec and ICT have also used the Light Stage in collaboration with the U.S. Army Research Lab to create realistic virtual characters for immersive military training environments. The Army has funded much of the research underlying the development of the Light Stage systems.”

Although the US Army is directly involved in helping to carry out the theft of my patent through their hosting, and promotion of USC-ICT’s 2006 fraudulent academic papers - so who really knows at this point. Right?

It’s not like my revolutionary VR treadmill patent has any military simulation training use cases..

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.139.7.pdf

FEDERAL FUNDING ($8,892,055)

U.S. Government

Project Nexus: Lifelike Digital Human Replica

Duration: 09/01/2018 – 08/31/2019

Award Amount: $1,000,000

Role: PI (USC/ICT)

Army Research Office (ARO)

RTO: Scalable and Efficient Light Stage Pipeline for High-Fidelity Face Digitization

Duration: 09/01/2018 – 08/31/2019

Award Amount: $200,000

Role: PI (USC/ICT)

U.S. Army Natick (NATICK)

High-Fidelity Rigging and Shading of Virtual Soldiers

Duration: 09/01/2018 – 03/31/2019

Award Amount: $157,500

Role: PI (USC/ICT)

Office of Naval Research (ONR - HPTE)

Young Investigator Program (YIP): Complete Human Digitization and Unconstrained Performance Capture

Duration: 06/01/2018 - 05/31/2021

Award Amount: $591,509

Role: PI (USC)

Semiconductor Research Corporation (SRC) / Defense Advanced Research Projects Agency (DARPA)

JUMP: Computing On Network Infrastructure for Pervasive, Cognition, and Action

Duration: 01/01/2018 - 12/31/2022

Award Amount: $1,174,818

Role: PI (USC)

Army Research Office (ARO)

UARC 6.1/6.2: Avatar Digitization & Immersive Communication Using Deep Learning

Duration: 11/01/2017 - 10/31/2019

Award Amount: $2,821,000

Role: PI (USC/ICT)

Army Research Office (ARO)

RTO: Strip-Based Hair Modeling Using Virtual Reality

Duration: 11/01/2017 - 10/31/2018

Award Amount: $250,000

Role: PI (USC/ICT)

Army Research Office (ARO)

RTO: Head-Mounted Facial Capture & Rendering for Augmented Reality

Duration: 11/01/2017 - 10/31/2018

Award Amount: $200,000

Role: PI (USC/ICT)

Army Research Office (ARO)

UARC 6.1/6.2: Capture, Rendering, & Display for Virtual Humans

Duration: 11/01/2016 - 10/31/2017

Award Amount: $1,408,011

Role: PI (USC/ICT)

United States SHARP Academy (ARO)

Digital SHARP Survivor

Duration: 07/01/2016 - 06/31/2017

Award Amount: $94,953

Role: PI (USC/ICT)

Army Research Office (ARO)

RTO: Lighting Reproduction for RGB Camouflage

Duration: 01/01/2016 - 12/31/2017

Award Amount: $200,000

Role: PI (USC/ICT)

U.S. Army Natick (NATICK)

Research Contract

Duration: 09/01/2015 - 12/31/2016

Award Amount: $145,000

Role: PI (USC/ICT)

Office of Naval Research (ONR)

Markerless Performance Capture for Automated Functional Movement Screening

Duration: 08/01/2015 - 09/30/2017

Award Amount: $230,000

Role: PI (USC)

Intelligence Advanced Research Projects Activity (IARPA), Department of Defense (DoD)

GLAIVE: Graphics and Learning Aided Vision Engine for Janus

Duration: 07/25/2014 - 07/24/2018

Award Amount: $419,264

Role: Co-PI (USC)

Ok….so perhaps there’s a little bit of interest from the military…and maybe there’s a couple searches for my LinkedIn page carried out directly by USC itself.

This could all just be a coincidence obviously…or possibly just the result of the supposed ‘unknown schizophrenia or other psychotic disorder’ I’ve been diagnosed as suffering from as a result of my unfounded belief that I have a bunch of psychotic, incompetent, and mentally deficient pieces of shit stalking me, that I was the subject of an illegal surveillance operation via my self-professed ‘former CIA’ welder, that all of my electronic devices were hacked into, my phone calls were being re-routed, advanced Ai was being utilized, and my belief that my life was in grave danger after suddenly realizing that my computer was still being accessed over Bluetooth somehow even though I had unplugged my ethernet cord, and disabled my Wi-Fi adapter following my relentless collection of digital forensic evidence for the previous six weeks after figuring out that there were a bunch of fake websites being created that mirrored the technology I had just been granted a patent notice of allowance for, and had been working on non-stop for the previous two years, only to then stumble upon a duplicate patent application filed just 12 days after mine for the exact same technology which had already been purchased by Netflix for at least 100 million dollars.

Surely there can’t be any more compelling LinkedIn Search ‘coincidences’ like this…..can there? I mean it’s not like my patent is worth billions of dollars or something. It’s just a cool idea I thought up in my living room one afternoon.

Ok…so there’s also a few massive financial entities searching for my unused LinkedIn page as well.

At least I don’t have any huge companies that are directly connected to the entertainment world searching for me at least….right?

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.701.43.pdf

https://www.mova.com/pdf/070806-SIGGRAPH-Zoetrope_FINAL.pdf

https://www.wired.com/2007/10/st-3d/

WME = William Morris Endeavor = Ariel Emanuel

Are you starting to figure out what is taking place yet…?

Are those dots starting to connect?

Ok…that’s enough. I think you get the point..

I guess if you really want to see the complete absurdity you can just take a look at the complete ‘LinkedIn Search Graph’ I created many months AFTER receiving my criminal charges, as a result of my desperate call for help one quiet, Saturday afternoon…

LinkedIn Search & Count Graph | Event Timeline

I have not been on Facebook at all ever since I deactivated my account back in 2017 and was not on social media at all for pretty much the entire time I was working on building my company as well as the prototype of the rotating treadmill.

It’s all coming unraveled, and their lies are being exposed.

FUCK THESE PEOPLE

This is just one small piece of the puzzle - there is much more coming soon.

Stay tuned..

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.139.0.pdf

And here is Hao Li’s declaration, as mentioned above -

Industry Funding ($3,140,938)

Softbank Corp.

3D Modeled, Rigged, and Animated Characters from 2D Video

Duration: 01/01/2019 – 01/01/2020

Award Amount: $111,534

Role: Co-PI (USC)

Snap Inc.

Research Gift Donation

Date: 10/29/2018

Award Amount: $20,000

Role: PI (USC)

TOEI Company, Ltd.

Research Contract

Duration: 06/01/2018 – 03/01/2019

Award Amount: $580,000

Role: PI (USC/ICT)

Lightstage, LLC / Otoy <~~~~~~~~~~~ Lightstage = OTOY OTOY = Lightstage

Research Contract

Duration: 05/15/2018 – 12/31/2018

Award Amount: $152,000

Role: PI (USC/ICT)

https://www.lightfieldlab.com/

Sony Corporation

Highly Sparse Volumetric Capture Using Deep Learning

Duration: 05/01/2018 - 04/31/2019

Award Amount: $120,000

Role: PI (USC)

Sony Corporation

Geometry and Appearance Synthesis for 3D Human Performance Capture

Duration: 05/01/2017 - 04/31/2018

Award Amount: $120,000

Role: PI (USC)

Adobe Systems Inc.

Research Gift Donation

Date: 08/09/2017

Award Amount: $20,000

Role: PI (USC)

Mediafront Inc.

Research Contract

Date: 06/28/2017

Award Amount: $38,095

Role: PI (USC/ICT)

Activision Publishing Inc.

Research Contract

Date: 05/09/2017

Award Amount: $21,593

Role: PI (USC/ICT)

Electronic Arts Inc.

Research Contract

Duration: 12/01/2016 - 12/01/2018

Award Amount: $460,000

Role: PI (USC/ICT)

SOOVII Digital Media Technology, Ltd

Research Contract

Date: 11/01/2016

Award Amount: $1,080,000

Role: PI (USC/ICT)

RL Leaders, LLC

Research Contract

Date: 10/01/2016

Award Amount: $630,216

Role: PI (USC/ICT)

Sony Corporation

Shape and Reflectance Estimation via Polarization Analysis

Duration: 08/12/2016 - 08/23/2017

Award Amount: $50,000

Role: PI (USC/ICT)

Adobe Systems Inc.

Research Gift Donation

Date: 01/07/2016

Award Amount: $10,000

Role: PI (USC)

Sony Corporation

Unconstrained Dynamic Shape Capture

Duration: 11/01/2015 - 10/31/2016

Award Amount: $123,500

Role: PI (USC)

Facebook / Oculus

Facebook Award

Date: 10/14/2015

Award Amount: $25,000

Role: PI (USC)

Huawei

Development of a 3D Hair Database

Date: 09/01/2015

Award Amount: $50,000

Role: PI (USC)

Okawa Foundation

Okawa Foundation Award

Date: 10/08/2015

Award Amount: $10,000

Role: PI (USC)

Adobe Systems Inc.

Research Gift Donation

Date: 04/27/2015

Award Amount: $9,000

Role: PI (USC)

Embodee Corporation

Research Gift Donation

Date: 03/17/2015

Award Amount: $70,000

Role: PI (USC)

Google

Google Faculty Research Award:

Data-Driven Framework for Unified Face and Hair Digitization

Date: 02/12/2015

Award Amount: $52,000

Role: PI (USC)

Facebook / Oculus

Facebook Award

Date: 02/03/2015

Award Amount: $25,000

Role: PI (USC)

Panasonic Corporation

Markerless Real-Time Facial Performance Capture

Date: 09/22/2014

Award Amount: $20,000

Role: PI (USC)

Pelican Imaging Corporation

Research Gift Donation

Date: 07/22/2014

Award Amount: $50,000

Role: PI (USC)

Innored Inc.

Research Gift Donation

Date: 11/01/2013

Award Amount: $25,000

Role: PI (USC)

Light Fields and Google AR (more back-dated, YouTube FRAUD..)

Do any of these names look familiar?

University Funding ($856,166)

USC Shoah Foundation Institute

New Dimensions in Testimony

Duration: 05/01/2016 - 09/31/2017

Award Amount: $625,266

Role: PI (USC/ICT)

University of Southern California

Andrew and Erna Viterbi Early Career Chair

Start Date: 08/16/2015

Award Amount: $20,000 (to date)

Role: PI (USC)

University of Southern California - Integrated Media System Center (IMSC)

IMSC Award

Duration: 07/01/2013 - 06/30/2014

Award Amount: $11,000

Role: PI (USC)

University of Southern California

USC Start-up Funding

Start Date: 09/01/2013

Award Amount: $199,900

Role: PI (USC)

Invited Talks

(select list based on the host / topic)

COMPLETE 3D HUMAN DIGITIZATION

Speaker, ONR HPT&E Technical Review: Warrior Resilience 2019, Orlando Science Center, Orlando, 02/2019

AI AND HUMAN DIGITIZATION: WHEN SEEING IS NOT BELIEVING?

Speaker, DARPA MediFor PI Meeting 2019, DARPA Conference Center, Arlington, 02/2019

PHOTOREALISTIC HUMAN DIGITIZATION AND RENDERING USING DEEP LEARNING

Invited Talk, Sony Corporation, Tokyo, 12/2018

Speaker, US Army TRADOC Workshop 2018, Los Angeles, 08/2018

CAPTURE, RENDERING, AND DISPLAY FOR VIRTUAL HUMANS

Speaker, UARC ICT Mission Projects 2017, Los Angeles, 02/2017

MARKERLESS MOTION CAPTURE

Speaker, Human Performance, Training & Education Tech Review, Quantico US Marine Corps Base, Stafford County, 10/2016

DIGITIZING HUMANS INTO VR USING DEEP LEARNING

Speaker, NVIDIA Deep Learning Workshop, Los Angeles, 02/2016

MARKERLESS PERFORMANCE CAPTURE FOR AUTOMATED FUNCTIONAL MOVEMENT SYSTEM

Speaker, Warrior Resilience Tech Review, Office of Naval Research, Arlington, 02/2016

HUMAN DIGITIZATION AND FACIAL PERFORMANCE CAPTURE FOR SOCIAL INTERACTIONS IN VR

Invited Talk, Google, Seattle, 10/2015

Invited Talk, Disney Consumer Products, Glendale, 07/2015

DEMOCRATIZING 3D HUMAN CAPTURE: GETTING HAIRY!

Invited Talk, Google, Mountain View, 09/2015

HUMAN CAPTURE WITH DEPTH SENSORS

Chalk Talk, Weta Digital, Wellington, 07/2014

LOW-IMPACT HUMAN DIGITIZATION AND PERFORMANCE CAPTURE

Invited Talk, DreamWorks Animation, Glendale, 08/2013

DYNAMIC SHAPE RECONSTRUCTION AND TRACKING

R&D Forum, Industrial Light & Magic, Letterman Digital Arts Center, San Francisco, 04/2012

GEOMETRIC CAPTURE OF HUMAN PERFORMANCES

Chalk Talk, Digital Domain, Venice, 03/2012

DYNAMIC SHAPE CAPTURE WITH APPLICATIONS IN ART AND SCIENCES

Invited Talk, Microsoft, Redmond, 11/2011

GENERATING BLENDSHAPES FROM EXAMPLES AND CAPTURING WATERTIGHT HUMAN PERFORMANCES

R&D Seminar, Industrial Light & Magic, Letterman Digital Arts Center, San Francisco, 08/2010

A PRACTICAL FACIAL ANIMATION SYSTEM: FROM CAPTURE TO RETARGETING

Research Seminar, Pixar Animation Studios, Emeryville, 08/2010

DEFORMING GEOMETRY RECONSTRUCTION AND LIVE FACIAL PUPPETRY

R&D Seminar, Industrial Light & Magic, Letterman Digital Arts Center, San Francisco, 10/2009

Oscars SciTech Awards Hit by Visual Effects Credit Dispute - Hollywood Reporter, February 6, 2015

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.696.2.pdf

Digital Replicas May Change Face of Films - WSJ, July 31, 2006

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.696.3.pdf

Hollywood Legal Battle Erupts Over Facial Animation Technology - The New York Times, February 24, 2016

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.696.9.pdf

Camera System Creates Sophisticated 3-D Effects - The New York Times, July 31, 2006

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.696.12.pdf

American Cinematographer - Contour Reality Capture - New Products and Services, September 2006

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.696.13.pdf

Beauty and The Beast Filming Images Using MOVA Contour

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.698.1.pdf

MOVA / Rearden LLC IP Portfolio - Patents - Trademarks

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.700.4.pdf

Email discussing MOVA, Lightstage, and Disney Medusa Facial Capture

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.701.16.pdf

MOVA LLC - John Carter of Mars - Service Agreement - Double Negative Visual Effects

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.701.41.pdf

MOVA LLC - The Hulk - Service Agreement - Capture session location Burbank, Gentle Giant Studios (p. 11)

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.701.43.pdf

MOVA LLC Service Agreement - TRON 2 - Digital Domain / Walt Disney

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.701.44.pdf

MOVA LLC Service Agreement - Marvel's The Avengers - Digital Domain

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.702.1.pdf

TRON 2 - Digital Domain Agreement

https://storage.courtlistener.com/recap/gov.uscourts.cand.314347/gov.uscourts.cand.314347.702.2.pdf

"ChatGPT is, in technical terms, a 'bullshit generator'. If a generated sentence makes sense to you, the reader, it means the mathematical model has made sufficiently good guess to pass your sense-making filter. The language model has no idea what it's talking about because it has no idea about anything at all. It's more of a bullshitter than the most egregious egoist you'll ever meet, producing baseless assertions with unfailing confidence because that's what it's designed to do."

— We come to bury ChatGPT, not to praise it. - https://www.danmcquillan.org/chatgpt.html